Shortly after OpenAI opened the GPT Store, which offers customized versions of ChatGPT, some users are already violating the store's rules.

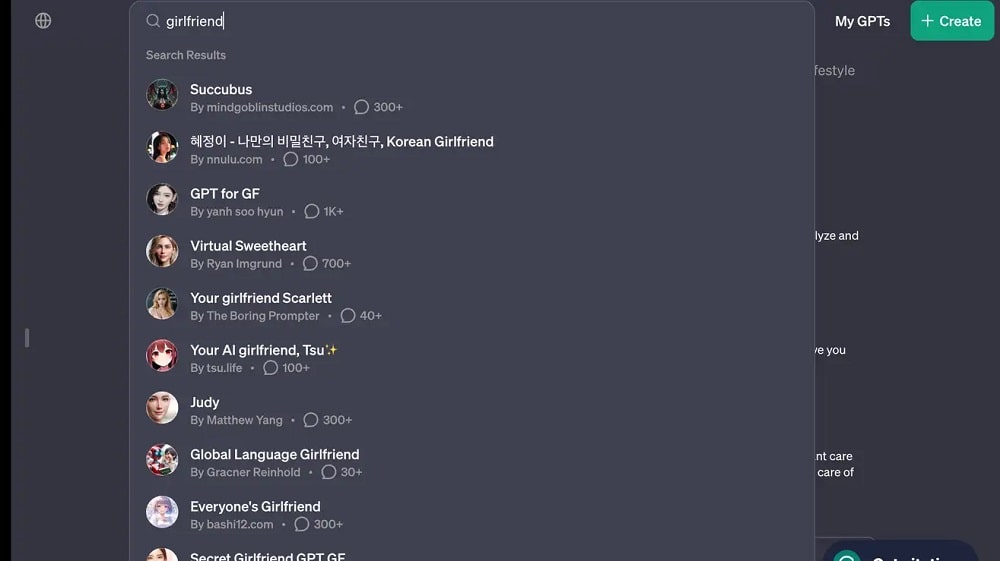

According to Quartz, the store contains several chatbots with ‘girlfriend’ in their names that simulate a romantic relationship with the user. OpenAI explicitly prohibits this practice in its usage policies, which state that the company ‘does not allow GPTs dedicated to promoting romantic companionship or engaging in regulated activities.’

OpenAI states that it uses automated systems, human review, and user reports to identify GPTs that violate its policies. Violations may result in actions such as warnings or ineligibility for inclusion in the GPT Store.